The NSA Type 1 encryption program has a rich and complex history, shaped by evolving threats, technological advancements, and…

Shaza Khan, April 11, 2024

Bring on the edge

We’ve moved beyond the cloud – the demands for near real-time insights outpace its inherent speed challenges. But more…

Mercury Systems, March 28, 2024

Intercepted communications: Encryption standards for the defense edge

Encryption is a critical component of data security, providing a reliable method for ensuring the security and…

Shaza Khan, March 18, 2024

Advancing the frontiers of space science: The James Webb Space Telescope and next-generation observatories

Larger payloads in space directly translate to the ability to design and deploy telescopes with larger apertures and more…

Vincent Pribble, February 2, 2024

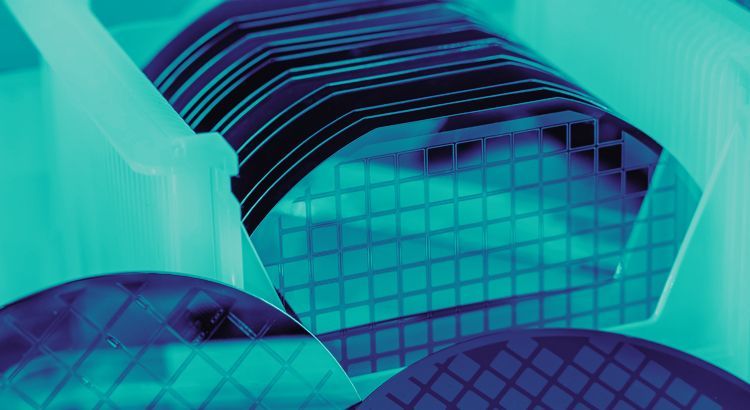

CHIPS Part 1: National security and the state of domestic chip manufacturing

The international chip-supply situation is putting U.S. security at risk and driving the need for the ...

March 8, 2023